How Egnyte Got Its Engineers to Use a New Configuration System

You can build the best system in the world, but it won’t matter if no one uses it.

That might sound obvious, but it’s often overlooked. Getting your users to buy into a new system is not as easy as flipping a switch. It takes planning, initiative, and reinforcement to make sure your software is broadly adopted.

I recently wrote about the challenges of configuration at scale, and how the engineering team at Egnyte rebuilt its internal configuration system from the ground up to address issues caused by our rapid growth. Today, I’d like to share how we designed it so that everyone would actually use it.

Identifying the Stakeholders

Once we determined the need for a new configuration system and understood the basic principles of its operation, it was time to add the bells and whistles. Our goal was to make sure people actually enjoyed using it and that we didn’t hear any grumbling from our internal users.

To do that, we had to make the system friendly to three different groups of users:

- Developers

- Site reliability engineers

- Product managers

Developers, Developers, Developers!

We knew that without developer advocacy, things would just remain the same. Sure, we could create the new system, spend the DevOps budget, and make everything real. However, the phrase “if you build it, they will come” does not automatically apply to projects like this. Developers have to want to use it. They have to love it. And for that to happen, the bar to introduce new configurations has to be extremely low.

To get there, we decided to register new configuration keys directly from the product code. Think Spring JavaConfig vs. XML, only the gap was even wider and the challenge harder, because this type of configuration is persisted in a database and has to be updated whenever the code changes.

We decided to encapsulate everything about a configuration key in a Java class: its name, its default value, the validations to run when the value changes, and similar properties. During node startup, a remote call then registers those keys with the configuration service.

Introducing a new key was as easy as implementing an interface and filling in methods such as “getName()” and “getDefaultValue()”. Within seconds, developers could create the class for a key, call “task_feature_enabled.get(customer_id)” from their code, and be confident that the whole bundle would just work.
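Below is a minimal sketch of what such a key class might look like. The `ConfigKey` interface and the `TaskFeatureEnabled` class are hypothetical illustrations, not Egnyte’s actual API. The in-memory `ConfigService` is also hypothetical: it stands in for the remote configuration service and its startup registration call.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical sketch; all names are illustrative, not Egnyte's real API.
// The demo class comes first so `java ConfigKeysDemo.java` can launch it directly.
class ConfigKeysDemo {
    static final TaskFeatureEnabled TASK_FEATURE_ENABLED = new TaskFeatureEnabled();

    public static void main(String[] args) {
        // At node startup: register every key this code version knows about.
        ConfigService.INSTANCE.register(TASK_FEATURE_ENABLED);

        System.out.println(TASK_FEATURE_ENABLED.get("customer-42")); // prints false (the default)
        ConfigService.INSTANCE.set(TASK_FEATURE_ENABLED, "customer-42", true);
        System.out.println(TASK_FEATURE_ENABLED.get("customer-42")); // prints true
    }
}

// Hypothetical key contract: everything about a key lives in one Java class.
interface ConfigKey<T> {
    String getName();

    T getDefaultValue();

    // Validation run whenever the value changes; accepts everything unless overridden.
    default Predicate<T> getValidator() {
        return value -> true;
    }

    // Convenience accessor mirroring task_feature_enabled.get(customer_id).
    default T get(String customerId) {
        return ConfigService.INSTANCE.get(this, customerId);
    }
}

// A concrete key: a few lines of code is all a developer writes.
final class TaskFeatureEnabled implements ConfigKey<Boolean> {
    @Override public String getName() { return "task_feature_enabled"; }
    @Override public Boolean getDefaultValue() { return false; }
    @Override public Predicate<Boolean> getValidator() { return value -> value != null; }
}

// Hypothetical in-memory stand-in for the remote configuration service.
final class ConfigService {
    static final ConfigService INSTANCE = new ConfigService();

    private final Map<String, Object> defaults = new HashMap<>();
    private final Map<String, Map<String, Object>> customerValues = new HashMap<>();

    void register(ConfigKey<?> key) {
        defaults.put(key.getName(), key.getDefaultValue());
    }

    <T> void set(ConfigKey<T> key, String customerId, T value) {
        if (!key.getValidator().test(value)) {
            throw new IllegalArgumentException("Invalid value for " + key.getName());
        }
        customerValues.computeIfAbsent(key.getName(), k -> new HashMap<>()).put(customerId, value);
    }

    // A per-customer value wins; otherwise fall back to the registered default.
    @SuppressWarnings("unchecked")
    <T> T get(ConfigKey<T> key, String customerId) {
        Map<String, Object> perCustomer = customerValues.getOrDefault(key.getName(), Map.of());
        return (T) perCustomer.getOrDefault(customerId, defaults.get(key.getName()));
    }
}
```

Keeping the validator on the key class means the rules for a value travel with the code that defines the key.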

This was a great first step toward easier adoption, but then came the dreaded ABA problem.

ABA, or Traveling Back in Time

Consider the following timeline:

- t=1: Server X and Server Y both run version 1 of the code, which includes a key with a default value of A.

- t=2: Server X is redeployed with version 2 of the code, which changes the key’s default value to B. On startup, Server X registers the new default value and makes it available to all consumers, including Server Y.

- t=3: Server Y is restarted before it has been redeployed. During startup, it registers the default value from its version 1 code, which is A.

Oh no. We just accidentally rolled back the change we made. This isn’t just developer-unfriendly; it’s the kind of thing that makes developers say, “I’m never going to use this again.”

To overcome this issue, we had to remember key signatures. A signature is a digest (hash) computed over all of the key’s configuration fields. On registration, we apply the key only if its digest has never been seen before, i.e., it is not found in a dedicated key digest collection. In the timeline above, the registration Server Y makes at t=3 is simply ignored, because the digest for default value A was already recorded at t=1.

However, that created another issue: how do you explicitly go back when you actually want to? With the digest mechanism in place, this was easier to solve. We let developers override the automatic digest calculation and supply their own digest, preferably in a “date-time-ticket-number” format. It is less than perfect, but such cases were few and far between.
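The sketch below shows the digest check together with the explicit-digest escape hatch. For brevity, it assumes the signature covers only the key name and default value; the real signature covers all key fields. It uses SHA-256 as the hash. `DigestRegistry`, its methods, and the sample ticket-style digest are all hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of digest-based registration; not Egnyte's implementation.
class DigestRegistryDemo {
    public static void main(String[] args) {
        DigestRegistry registry = new DigestRegistry();
        String key = "task_feature_enabled";

        registry.register(key, "A", null); // t=1: Server X, version 1
        registry.register(key, "A", null); // t=1: Server Y, version 1 (same digest, no-op)
        registry.register(key, "B", null); // t=2: Server X redeployed with version 2
        registry.register(key, "A", null); // t=3: Server Y restarts on version 1, ignored
        System.out.println(registry.defaultOf(key)); // prints B

        // Deliberate rollback: the developer supplies a fresh, hand-written digest.
        registry.register(key, "A", "2021-06-01T15:30-CFG-1234"); // hypothetical ticket id
        System.out.println(registry.defaultOf(key)); // prints A
    }
}

final class DigestRegistry {
    private final Set<String> seenDigests = new HashSet<>(); // the "key digest collection"
    private final Map<String, String> currentDefaults = new HashMap<>();

    // SHA-256 over the key fields; the NUL separator keeps ("ab","c") distinct from ("a","bc").
    static String computeDigest(String name, String defaultValue) {
        try {
            MessageDigest sha = MessageDigest.getInstance("SHA-256");
            byte[] hash = sha.digest((name + '\u0000' + defaultValue).getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder();
            for (byte b : hash) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is required on every Java platform", e);
        }
    }

    // Applies the registration only if its digest was never seen; returns whether it was applied.
    boolean register(String name, String defaultValue, String explicitDigest) {
        String digest = explicitDigest != null ? explicitDigest : computeDigest(name, defaultValue);
        if (!seenDigests.add(digest)) {
            return false; // a stale node re-registering an old definition
        }
        currentDefaults.put(name, defaultValue);
        return true;
    }

    String defaultOf(String name) {
        return currentDefaults.get(name);
    }
}
```

Because every digest ever applied stays in the set, a rollback made with an explicit digest also sticks: if a version 2 node later restarts and re-registers B, that registration is ignored too.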

Pulling the Emergency Brake

No matter how well you code, sometimes you have to quickly revert a decision because of unforeseen consequences, e.g., by quickly disabling a feature.

Some configuration systems are notorious for impeding such efforts. Often you have to repeat the revert operation for each customer that has the feature. You also have to space the operations far enough apart so you don’t overload the system with “modify” requests. And when the emergency is over and you need to re-enable, you’re left digging through audit logs to figure out who had the feature enabled in the first place.

Luckily, by the time we designed this configuration system, we had already dealt with these types of issues. Well, we weren’t exactly lucky to have gone through those incidents, but at least we knew that an “emergency brake” was a must-have.

We introduced the concept of overrides. Normally, a value set in a more specific context (e.g., customer) trumps a value set in a less specific context (e.g., customer group). An override value, marked with an integer “force” greater than 0, ignores this principle: the value with the highest force level wins first, and only among values with the same force level is the more specific context preferred.

This let us react to issues quickly. If needed, we could create an override for the whole region, which fixed the problem immediately. Once the issue was resolved, we deleted the override, and the original values at more specific levels took precedence again.
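The sketch below implements the precedence rule and replays the emergency-brake scenario. It assumes the caller has already collected the values that apply to one customer, and that scopes run from region to customer group to customer. `Scope`, `ConfigValue`, and `Resolver` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical sketch of force-aware resolution; names are illustrative only.
class OverrideDemo {
    public static void main(String[] args) {
        // Values that apply to one customer; force 0 means a regular (non-override) value.
        List<ConfigValue> values = new ArrayList<>();
        values.add(new ConfigValue(Scope.CUSTOMER_GROUP, 0, "off"));
        values.add(new ConfigValue(Scope.CUSTOMER, 0, "on"));
        System.out.println(Resolver.resolve(values).orElse("unset")); // prints on (most specific wins)

        // Emergency brake: one region-wide override with force 1.
        ConfigValue brake = new ConfigValue(Scope.REGION, 1, "off");
        values.add(brake);
        System.out.println(Resolver.resolve(values).orElse("unset")); // prints off (highest force wins)

        // Emergency over: delete the override and the customer's own value is back.
        values.remove(brake);
        System.out.println(Resolver.resolve(values).orElse("unset")); // prints on
    }
}

// Declared from least to most specific, so ordinal() doubles as specificity.
enum Scope { REGION, CUSTOMER_GROUP, CUSTOMER }

final class ConfigValue {
    final Scope scope;
    final int force; // 0 = regular value, > 0 = override
    final String value;

    ConfigValue(Scope scope, int force, String value) {
        this.scope = scope;
        this.force = force;
        this.value = value;
    }
}

final class Resolver {
    // Compare force first; specificity only breaks ties between equal force levels.
    static final Comparator<ConfigValue> PRECEDENCE =
            Comparator.<ConfigValue>comparingInt(v -> v.force)
                    .thenComparingInt(v -> v.scope.ordinal());

    static Optional<String> resolve(List<ConfigValue> candidates) {
        return candidates.stream().max(PRECEDENCE).map(v -> v.value);
    }
}
```

Nothing at the customer level is modified during the emergency. This is why deleting the single override is enough to restore every customer’s previous state, with no audit-log archaeology.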

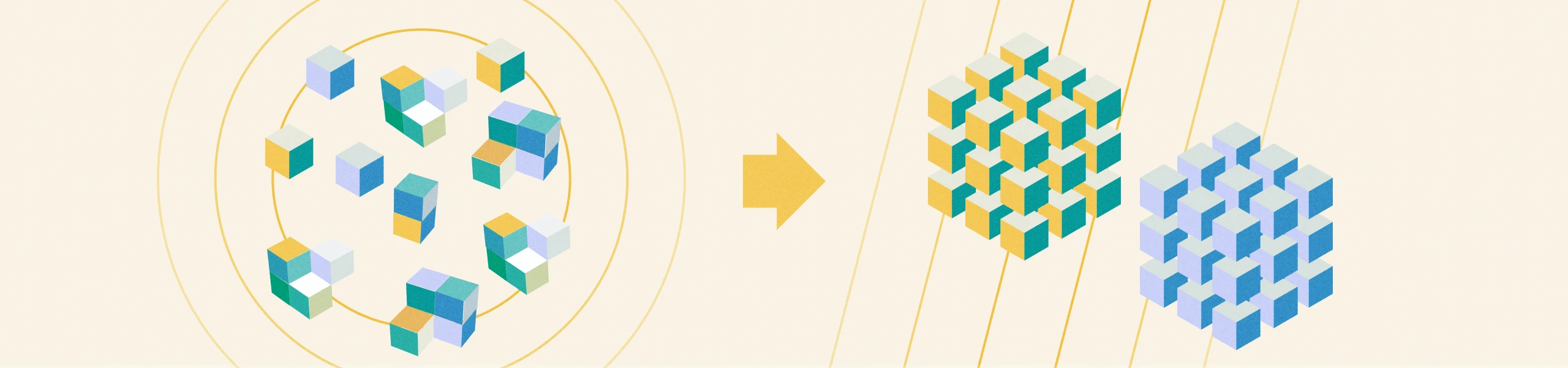

The Circle of Life

Many of the configuration items we introduced were temporary by design, such as feature flags and other rollout mechanisms. But while we were designing for developers and system operators, our product managers approached us with a feature request of their own: a configuration item lifecycle.

From the product managers’ point of view, the lifecycle begins when a configuration is introduced and then passes through milestones such as internal availability, limited availability, general availability, maturity, and sometimes removal of the option to revert the configuration. Being able to remove a configuration is just as important as adding one: it allows developers to delete the associated code branches, which otherwise weigh on every coding and testing activity.

To support this, we allowed product managers to define a timeline per configuration item, with milestones such as limited availability, general availability, forced enablement, and maturity. These could be defined post hoc, i.e., persisted directly into the database at runtime, since the picture often changes after the code has been delivered.
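One possible shape for a per-key timeline is sketched below. Three things in it are assumptions made for illustration: the `Stage` enum, the `Timeline` class, and the rule that the current stage is the furthest milestone already reached. In the system described here, the milestone dates would live in the database rather than in memory.

```java
import java.time.LocalDate;
import java.util.EnumMap;
import java.util.Map;

// Hypothetical sketch of a per-key lifecycle timeline; names are illustrative only.
class LifecycleDemo {
    public static void main(String[] args) {
        // Milestones can be filled in after the code ships (post hoc).
        Timeline timeline = new Timeline()
                .set(Stage.LIMITED_AVAILABILITY, LocalDate.of(2021, 1, 10))
                .set(Stage.GENERAL_AVAILABILITY, LocalDate.of(2021, 3, 1));

        System.out.println(timeline.stageOn(LocalDate.of(2020, 12, 1))); // prints INTRODUCED
        System.out.println(timeline.stageOn(LocalDate.of(2021, 2, 1)));  // prints LIMITED_AVAILABILITY
        System.out.println(timeline.stageOn(LocalDate.of(2021, 4, 1)));  // prints GENERAL_AVAILABILITY
    }
}

// Lifecycle stages, in the order a key normally passes through them.
enum Stage { INTRODUCED, LIMITED_AVAILABILITY, GENERAL_AVAILABILITY, FORCE_ENABLED, MATURE }

final class Timeline {
    // EnumMap iterates in the enum's declaration order.
    private final Map<Stage, LocalDate> milestones = new EnumMap<>(Stage.class);

    Timeline set(Stage stage, LocalDate date) {
        milestones.put(stage, date);
        return this;
    }

    // The furthest stage (in declaration order) whose milestone date is on or before `day`.
    Stage stageOn(LocalDate day) {
        Stage current = Stage.INTRODUCED;
        for (Map.Entry<Stage, LocalDate> milestone : milestones.entrySet()) {
            if (!milestone.getValue().isAfter(day)) {
                current = milestone.getKey();
            }
        }
        return current;
    }
}
```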

In addition, we created a way to track which keys are in use. This was a hard problem to crack because, as my professors like to say, “essentially it can be reduced to the halting problem.”

In this case, it was easy to see that this was actually true: how can you know whether the product contains a call to a specific key just by observing the calls it makes? The call might hide in a rarely invoked branch, and if you remove the key too early, you can crash your product.

We eventually settled on building a “key access history.” Every key access would update a “key last accessed” field. After a few months without activity, we could reasonably hypothesize that the key was no longer in use. A report would be generated, product managers would sign off (if needed), and developers would remove the deprecated key and all related code branches.

Interestingly, we had to exclude “scanning” activity from the key access history. Any code that scanned all keys (or a subset of them) and then accessed them one by one could not be allowed to touch the last-access field; otherwise, a single scan would make every key look recently used.
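Here is the access-history idea in miniature, including the scanning exception. `AccessTracker`, its methods, and the 90-day threshold are hypothetical. Timestamps are passed in explicitly so the example is deterministic; a real service would read a clock and persist the field.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of a key access history; names and threshold are illustrative.
class AccessHistoryDemo {
    public static void main(String[] args) {
        Instant start = Instant.parse("2021-01-01T00:00:00Z");
        AccessTracker tracker = new AccessTracker();
        tracker.register("task_feature_enabled", true, start);
        tracker.register("old_rollout_flag", false, start);

        tracker.get("task_feature_enabled", start.plus(Duration.ofDays(150))); // product code read
        tracker.scanAll();                                                   // e.g. an export job

        Instant now = start.plus(Duration.ofDays(200));
        System.out.println(tracker.staleKeys(now, Duration.ofDays(90))); // prints [old_rollout_flag]
    }
}

final class AccessTracker {
    private final Map<String, Object> values = new HashMap<>();
    private final Map<String, Instant> lastAccessed = new HashMap<>();

    void register(String key, Object value, Instant now) {
        values.put(key, value);
        lastAccessed.putIfAbsent(key, now); // a new key starts its clock at registration
    }

    // Regular read from product code: touches the "key last accessed" field.
    Object get(String key, Instant now) {
        if (values.containsKey(key)) {
            lastAccessed.put(key, now);
        }
        return values.get(key);
    }

    // Scanning read: copies all values WITHOUT touching last access,
    // otherwise every scan would make every key look used.
    Map<String, Object> scanAll() {
        return new HashMap<>(values);
    }

    // Keys not read for longer than the threshold: candidates for the removal report.
    List<String> staleKeys(Instant now, Duration threshold) {
        List<String> stale = new ArrayList<>();
        for (Map.Entry<String, Instant> entry : lastAccessed.entrySet()) {
            if (Duration.between(entry.getValue(), now).compareTo(threshold) > 0) {
                stale.add(entry.getKey());
            }
        }
        Collections.sort(stale);
        return stale;
    }
}
```

Separating `get` from `scanAll` makes the scanning exception a property of the API rather than something each caller has to remember.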

Did We Stick the Landing?

Ultimately, we were able to build a system that all our stakeholders bought into.

As of this post, there are more than 1,300 keys in our production system, 170 of which have completed their full lifecycle and been deprecated. On average, 15 new keys are registered every week. Asking “did you put a flag?” has become standard procedure for every feature and every non-trivial piece of code.

More than 700,000 configuration change operations have been executed since the initial deployment, and the pace is accelerating. Our product managers and SREs are well trained and have executed the vast majority of those operations without any developer involvement.